Data Pipeline Orchestration on Steroids: Getting Started with Apache Airflow, Part 1

27 Jun 2020

Source: Unsplash

### What is Apache Airflow?

Apache Airflow is an open source data workflow management project originally created at Airbnb in 2014. In terms of the data workflows it covers, we can think about the following sample use cases:

🚀 Automate Training, Testing and Deploying a Machine Learning Model

🍽 Ingesting Data from Multiple REST APIs

As we can see, one of the main features of Airflow is its flexibility: it can be used for many different data workflow scenarios. Thanks to this flexibility and its rich feature set, it has gained significant traction over the years and has been battle tested in many companies, from startups to Fortune 500 enterprises. Some examples include Spotify, Twitter, Walmart, Slack, Robinhood, Reddit, PayPal, Lyft and, of course, Airbnb.

OK, But Why?

Feature Set

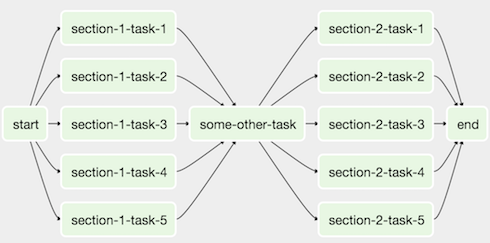

- Apache Airflow works with the concept of Directed Acyclic Graphs (DAGs), which are a powerful way of defining dependencies across different types of tasks. In Apache Airflow, DAGs are developed in Python, which unlocks many interesting features from software engineering: modularity, reusability, readability, among others.

Source: Apache Airflow Documentation

- Sensors, Hooks and Operators are the main building blocks of Apache Airflow. They provide an easy and flexible way of connecting to multiple external resources. Want to integrate with Amazon S3? Maybe you also want to add Azure Container Instances to the picture in order to run some short-lived Docker containers? Perhaps running a batch workload in an Apache Spark or Databricks cluster? Or maybe just executing some basic Python code to connect with REST APIs? Airflow ships with multiple operators, hooks and sensors out of the box, which allow for easy integration with these resources and many more, such as DockerOperator, BashOperator, HiveOperator and JdbcOperator; the list goes on. You can also build upon one of the standard operators to create your own, or simply write your own operators, hooks and sensors from scratch.

- The UI allows for quick and easy monitoring and management of your Airflow instance. Detailed logs also make it easier to debug your DAGs.

Airflow UI Tree View. Source: Airflow Documentation

…and there are many more. Personally, I believe one of the fun parts of working with Airflow is discovering new and exciting features as you use it, and if you miss something, you might as well create it.

- It is part of the Apache Software Foundation, and the community behind it is pretty active: currently there are more than a hundred direct contributors. One might argue that open source projects always run the risk of dying at some point, but with a vibrant developer community we can say this risk is mitigated. In fact, 2020 has seen individual contributions for Airflow at an all-time high.
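To make the DAG idea from the feature list above more concrete, here is a minimal sketch in plain Python, with no Airflow involved. It assumes Python 3.9+ for the standard-library `graphlib` module, and the task names and helper functions are hypothetical. The point is only that a valid run order places every task after the tasks it depends on, and that a cycle makes such an order impossible.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}


def execution_order(graph):
    """Return one valid run order for a dependency graph."""
    return list(TopologicalSorter(graph).static_order())


def has_cycle(graph):
    """Return True if the graph contains a cycle (so it is not a DAG)."""
    try:
        execution_order(graph)
    except CycleError:
        return True
    return False


print(execution_order(dependencies))
print(has_cycle({"a": {"b"}, "b": {"a"}}))
```

Airflow builds a comparable dependency graph from the tasks you declare and uses it to decide what can run next.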

Apache Airflow Tutorial

Time to get our hands dirty and actually start with the tutorial.

There are multiple ways of installing Apache Airflow. In this introduction we will cover the easiest one, which is installing it from the PyPI repository.

Basic Requirements

- Python 3.6+

- Pip

- Linux/Mac OS. For those running Windows, enable and install Windows Subsystem for Linux (WSL), download Ubuntu 18.04 LTS from the Microsoft Store and be happy :)

Initial Setup

- Create a new directory for your Airflow project (e.g. “airflow-intro”)

- From your new directory, create and activate a new virtual environment for your Airflow project using venv

      # Run this from the newly created directory to create the venv
      python3 -m venv venv

      # Activate your venv
      source venv/bin/activate

- Install apache-airflow through pip

      pip install apache-airflow

Before proceeding, it is worth discussing one of Airflow’s core components: the Executor. The name is pretty self-explanatory: this component handles the coordination and execution of different tasks across multiple DAGs.

There are many types of Executors in Apache Airflow, such as the SequentialExecutor, LocalExecutor, CeleryExecutor, DaskExecutor and others. For the sake of this tutorial, we will focus on the SequentialExecutor. It offers very basic functionality and has one main limitation: it cannot execute tasks in parallel. It is paired with a SQLite backend database, which allows only one writer at a time, so running several tasks concurrently is not supported. It is therefore not recommended for a production setup, but that is not an issue for our case.
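The SQLite limitation is easy to observe outside Airflow. The sketch below uses only the standard-library `sqlite3` module; the function name and the temporary database file are hypothetical and have nothing to do with Airflow's own metadata database. It opens two connections to the same file and shows that the second cannot start a write transaction while the first still holds the write lock.

```python
import os
import sqlite3
import tempfile


def second_writer_is_blocked(db_path):
    """Return True if a second connection cannot begin a write transaction
    while the first connection is still holding the write lock."""
    # timeout=0 makes a blocked connection fail immediately instead of waiting;
    # isolation_level=None lets us issue BEGIN/ROLLBACK explicitly.
    first = sqlite3.connect(db_path, timeout=0, isolation_level=None)
    second = sqlite3.connect(db_path, timeout=0, isolation_level=None)
    try:
        first.execute("BEGIN IMMEDIATE")  # first connection takes the write lock
        try:
            second.execute("BEGIN IMMEDIATE")  # second writer tries the same
        except sqlite3.OperationalError:
            return True  # SQLite refused the second writer
        second.execute("ROLLBACK")
        return False
    finally:
        if first.in_transaction:
            first.execute("ROLLBACK")
        first.close()
        second.close()


with tempfile.TemporaryDirectory() as tmp:
    blocked = second_writer_is_blocked(os.path.join(tmp, "demo.db"))
    print("second writer blocked:", blocked)
```

A database such as PostgreSQL or MySQL does not have this restriction, which is why the parallel executors are used with one of those backends.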

Going back to our example, we need to initialize our backend database. But before that, we must override our AIRFLOW_HOME environment variable, so that we specify that our current directory will be used for running Airflow.

    export AIRFLOW_HOME=$(pwd)
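If the variable is not set, Airflow falls back to `~/airflow`, which is why we export it explicitly. A rough sketch of that lookup in Python follows; `resolve_airflow_home` is a hypothetical helper written for illustration, not a function from the Airflow package.

```python
import os
from pathlib import Path


def resolve_airflow_home(environ=os.environ):
    """Simplified model of the lookup: AIRFLOW_HOME if set, else ~/airflow."""
    value = environ.get("AIRFLOW_HOME")
    return Path(value).expanduser() if value else Path.home() / "airflow"


# Shows which directory would be used in the current shell session.
print(resolve_airflow_home())
```

Running this in the same shell after the `export` should print your project directory.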

Now we can initialize our Airflow database by executing the following command (this is the Airflow 1.10 syntax; Airflow 2.x renamed it to airflow db init):

    airflow initdb

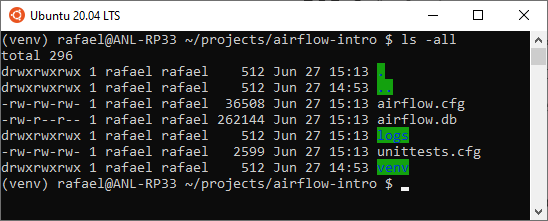

Take a look at the output and make sure that no error messages are displayed. If everything went well, you should now see the following files in your directory:

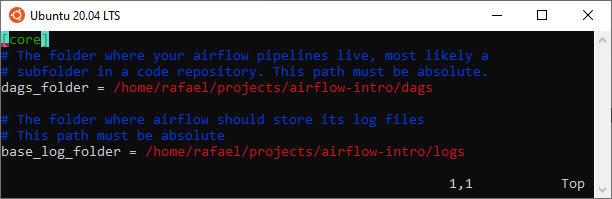

To confirm that the initialization worked, inspect airflow.cfg and check that the following lines in the [core] section point to your working directory. If they do, you should be good to go.

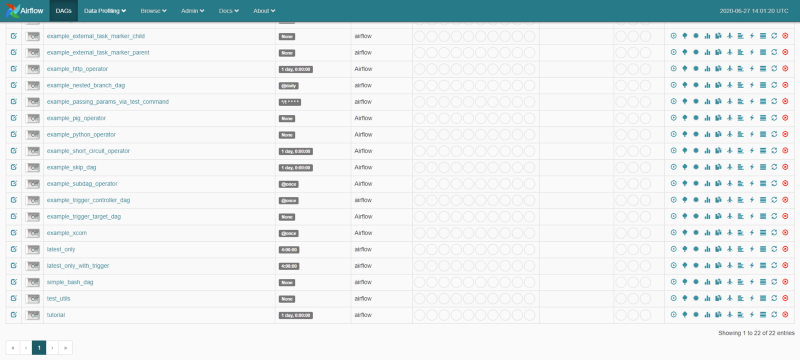

Optional: Airflow ships with many sample DAGs, which can help you get up to speed with how they work. While they are certainly helpful, they can clutter your UI. You can set load_examples to False in airflow.cfg, so that you see only your own DAGs in the Airflow UI.
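The same inspection can be done programmatically. The sketch below parses a small, made-up excerpt of an airflow.cfg with the standard-library `configparser`; the paths are placeholders and both helper functions are hypothetical. It assumes the `dags_folder` and `load_examples` keys live in the `[core]` section.

```python
import configparser

# Hypothetical excerpt of an airflow.cfg; the path is a placeholder.
SAMPLE_CFG = """
[core]
dags_folder = /home/me/airflow-intro/dags
load_examples = True
"""


def read_core_settings(cfg_text):
    """Parse the [core] section and return the two settings discussed above."""
    # interpolation=None because the real file contains literal '%' characters.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    core = parser["core"]
    return {
        "dags_folder": core.get("dags_folder"),
        "load_examples": core.getboolean("load_examples"),
    }


def points_to(path, home):
    """Return True if a configured path lives under the project directory."""
    return path.startswith(home.rstrip("/") + "/")


settings = read_core_settings(SAMPLE_CFG)
print(settings)
print(points_to(settings["dags_folder"], "/home/me/airflow-intro"))
```

To check your own installation, read the real file with `parser.read(...)` instead of the sample string and compare against your project directory.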

DAG Creation

We will start with a really basic DAG, which will do two simple tasks:

- Create a text file

- Rename this text file

To get started, create a new Python script file named simple_bash_dag.py inside the dags folder of your project directory (create the folder first if it does not exist yet). In this script, we must first import some modules:

    # Python standard modules
    from datetime import datetime, timedelta

    # Airflow modules
    from airflow import DAG
    # Airflow 1.10 import path; in Airflow 2.x use airflow.operators.bash
    from airflow.operators.bash_operator import BashOperator

We now proceed to creating a DAG object. In order to do that, we must specify some basic parameters, such as when it becomes active and how many retries should be made if any of its tasks fail. Let’s define these in a default_args dictionary, which Airflow applies to every task in the DAG:

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        # Start on 27th of June, 2020
        'start_date': datetime(2020, 6, 27),
        'email': ['airflow@example.com'],
        'email_on_failure': False,
        'email_on_retry': False,
        # In case of errors, do one retry
        'retries': 1,
        # Do the retry with 30 seconds delay after the error
        'retry_delay': timedelta(seconds=30),
        # schedule_interval is a DAG-level argument and has no effect here,
        # so it is passed to DAG(...) directly instead
    }
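As a mental model, the defaults above are merged into every task, and anything a task sets explicitly wins. The sketch below imitates that behaviour with a plain dictionary merge; `effective_task_args` is a hypothetical helper and a simplification, not Airflow's actual implementation.

```python
from datetime import timedelta


def effective_task_args(default_args, **task_kwargs):
    """Simplified model: explicit task arguments override DAG-level defaults."""
    return {**default_args, **task_kwargs}


defaults = {"owner": "airflow", "retries": 1, "retry_delay": timedelta(seconds=30)}

# A task that overrides nothing inherits every default.
plain = effective_task_args(defaults, task_id="create_file")

# A task that passes retries explicitly keeps its own value.
custom = effective_task_args(defaults, task_id="change_file_name", retries=3)

print(plain["retries"], custom["retries"])
```

This is why settings shared by all tasks belong in the dictionary, while one-off exceptions can be passed straight to the operator.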

We have defined our parameters. Now it is time to actually tell our DAG what it is supposed to do. We do this by declaring different tasks — T1 and T2. We must also define which task depends on the other.

    with DAG(
        dag_id='simple_bash_dag',
        default_args=default_args,
        # No schedule: the DAG runs only when triggered manually
        schedule_interval=None,
        tags=['my_dags'],
    ) as dag:

        # Here we define our first task
        t1 = BashOperator(bash_command="touch ~/my_bash_file.txt", task_id="create_file")

        # Here we define our second task
        t2 = BashOperator(bash_command="mv ~/my_bash_file.txt ~/my_bash_file_changed.txt", task_id="change_file_name")

        # Configure T2 to be dependent on T1's execution
        t1 >> t2
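The `>>` in the last line is ordinary Python operator overloading: in Airflow, `t1 >> t2` is shorthand for `t1.set_downstream(t2)`. The toy class below sketches how such an operator can be built; `Task` is a hypothetical stand-in for illustration and not Airflow's real operator class.

```python
class Task:
    """Toy stand-in for an operator; not Airflow's real class."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def set_downstream(self, other):
        """Record that `other` must run after this task."""
        self.downstream.append(other)

    def __rshift__(self, other):
        # t1 >> t2 means "t2 runs after t1"; returning `other` allows chaining.
        self.set_downstream(other)
        return other


t1 = Task("create_file")
t2 = Task("change_file_name")
t3 = Task("hypothetical_cleanup")

t1 >> t2 >> t3
print([t.task_id for t in t1.downstream], [t.task_id for t in t2.downstream])
```

Returning the right-hand task from `__rshift__` is what makes chains such as `t1 >> t2 >> t3` read left to right.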

And as simple as that, we have finished creating our DAG 🎉

Testing our DAG

Let’s see what our DAG looks like and, most importantly, whether it works.

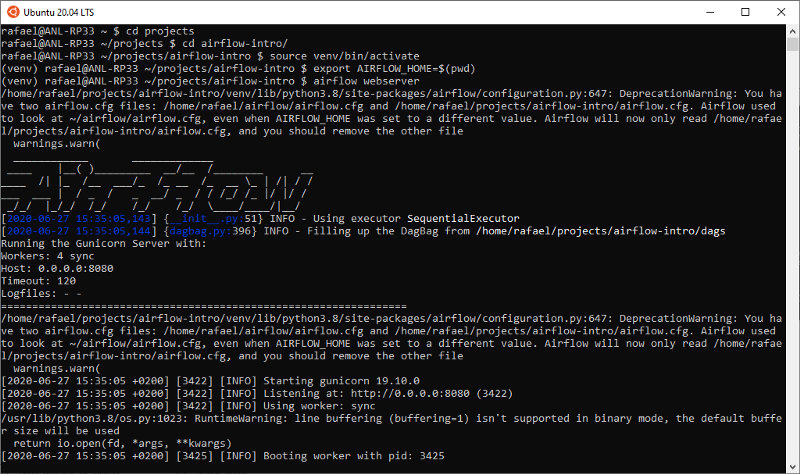

To do this, we must start two Airflow components, each in its own terminal (with the virtual environment activated and AIRFLOW_HOME exported):

- The Scheduler, which controls the flow of our DAGs

      airflow scheduler

- The Web Server, a UI which allows us to control and monitor our DAGs

      airflow webserver

You should see the following outputs (or at least something similar):

Output for the Scheduler’s startup

Output for the Webserver’s startup

#### Showtime

We should now be ready to look at our Airflow UI and test our DAG.

Just fire up your browser and go to http://localhost:8080. Once you hit Enter, the Airflow UI should be displayed.
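If the page does not load, a quick way to tell whether the webserver is listening at all is to try opening a TCP connection to the port. This is a small sketch using only the standard library; `is_listening` is a hypothetical helper, and port 8080 is assumed to be unchanged from the default.

```python
import socket


def is_listening(host, port, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


print("webserver reachable:", is_listening("localhost", 8080))
```

A result of False usually means the `airflow webserver` process is not running or is bound to a different port.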

Look for our DAG, simple_bash_dag, and click on the button to its left so that it is activated. Finally, on the right hand side, click on the play button ▶ to trigger the DAG manually.

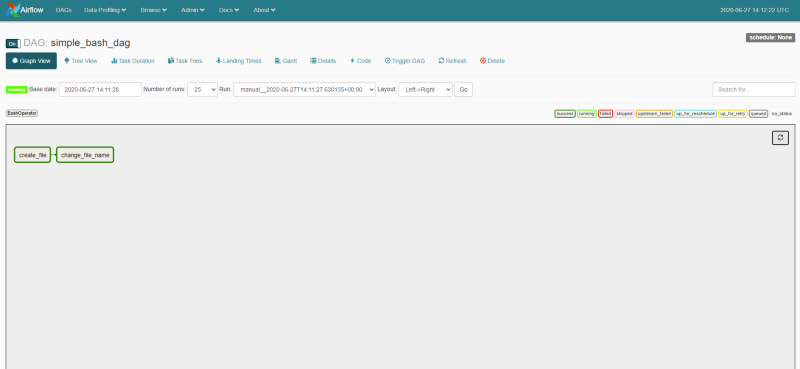

Clicking on the DAG enables us to see the status of the latest runs. If we click on the Graph View, we should see a graphical representation of our DAG — along with the color codes indicating the execution status for each task.

As we can see, our DAG has run successfully 🍾


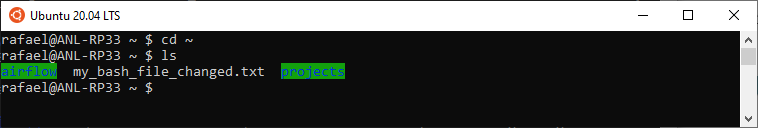

We can also confirm that by looking at our home directory:
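One way to run that check, sketched in Python: after a successful run the renamed file should exist and the original name should be gone. The function name is hypothetical, and the file names are the ones used in the two bash commands of the DAG.

```python
from pathlib import Path


def run_left_expected_files(home=None):
    """Return True if the renamed file exists and the original name is gone."""
    home = Path(home) if home is not None else Path.home()
    original = home / "my_bash_file.txt"
    renamed = home / "my_bash_file_changed.txt"
    return renamed.exists() and not original.exists()


# Checks the current user's home directory by default.
print(run_left_expected_files())
```

The optional `home` argument only exists so the check can be pointed at another directory.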

### Wrap Up


- We had a quick tutorial about Apache Airflow, how it is used in different companies and how it can help us in setting up different types of data pipelines

- We were able to install, set up and run a simple Airflow environment using a SQLite backend and the SequentialExecutor

- We used the BashOperator to run simple file creation and manipulation logic

There are many nice things you can do with Apache Airflow, and I hope my post helped you get started. If you have any questions or suggestions, feel free to comment.
